¶ What are inverse problems?

Surface-sensitive scattering experiments and reflectometry using neutrons and X-rays are established and unique measurement methods for determining characteristic and structural material properties. Due to the quality of neutron and X-ray radiation at modern large-scale research facilities, real-time experiments with high temporal resolution can be carried out in situ, e.g. to investigate kinetic processes during sample production or the effects of external influences. However, the information obtained in this way is usually not only contained in comparatively large amounts of data, but is also encoded in a reciprocal data structure. Conclusions about the real, structural properties of the samples under investigation are not unambiguous due to the measurement principle used: during the measurement, phase information is lost, which is why a simple inversion, e.g. using inverse Fourier transformation, is not possible. This inverse problem usually makes data analysis extremely complex, time-consuming and not trivially unambiguous. Overcoming the challenging data analysis is of central importance in order to fully exploit the extreme potential of reciprocal measurement methods and to make them accessible to a broad scientific user spectrum.

¶ Task areas

Definition of Inverse Problems and Preparation of Training Data

We prepare high-quality data that our artificial intelligence (AI) tools need to learn and work effectively. This involves collecting experimental data from cutting-edge research facilities and creating synthetic data using advanced simulations. These datasets are tailored to represent real-world scientific problems, such as studying thin films used in electronics, analyzing nanoparticle structures, and examining advanced materials for energy applications.

We also ensure that all datasets follow international standards for FAIR principles—making them Findable, Accessible, Interoperable, and Reusable. This means the data will be well-documented and easily shared with other scientists worldwide. By combining experimental and synthetic data, we provide a comprehensive training ground for the AI models, enabling them to handle diverse and complex challenges. This work package lays the groundwork for the rest of the project by ensuring that the data is accurate, diverse, and useful across different fields of research.

Development of Invertible Neural Networks (INNs)

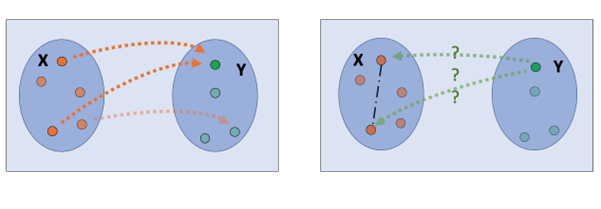

We create advanced AI models known as invertible neural networks (INNs). These models are designed to solve complex scientific challenges by working backward from experimental data to reveal hidden patterns or properties. For example, INNs can help scientists analyze the structure of materials at the nanoscale or understand the behavior of physical systems in high-energy experiments. What makes INNs special is their ability to handle uncertainty in the data, which is often noisy or incomplete in real-world research.

Another key feature of these models is their transparency. Unlike many AI systems that act like "black boxes," our INNs explain how they arrive at their conclusions. This not only builds trust but also helps researchers uncover new scientific insights. We are also focusing on making these models robust, so they perform reliably even when faced with unexpected data or unusual scenarios. Additionally, we are designing these systems to incorporate prior knowledge, such as data from real-space imaging techniques, to improve their accuracy and reduce ambiguity in their predictions.

Framework Design and Development

We focuse on translating the developed advanced AI tools into a user-friendly software platform. The goal is to make these tools accessible to researchers with varying levels of technical expertise. The platform will include a web-based interface that is simple and intuitive to use, as well as an application programming interface (API) that allows scientists to integrate the tools into their own workflows.

Flexibility is a key feature of this platform. Researchers will be able to customize the tools to meet their specific needs, whether they are working on materials science, particle physics, or another field. To ensure widespread adoption, we are designing the platform as open-source software. This means it will be freely available for anyone to use, modify, and share. By creating a robust and adaptable platform, this work package bridges the gap between advanced AI technologies and the everyday needs of scientists, enabling them to tackle complex problems more efficiently.

Quality Assurance

We rigorously test every component to ensure it meets the highest standards. This includes testing our AI models with real-world experimental data as well as synthetic data that mimics challenging scenarios. For example, we evaluate how well the models perform when analyzing noisy or incomplete datasets, which are common in scientific research.

We also test the speed and efficiency of the software to ensure it can handle large datasets and complex calculations in a timely manner. Additionally, we focus on making the tools robust, so they provide reliable results even under difficult conditions. By addressing these challenges, we build confidence in the AI tools and ensure they are ready for deployment in real-world research. This work package is critical for ensuring that the tools we develop are not only innovative but also practical and reliable for scientists.

Deployment in Research Institutions

The developed tools and software are deployed in research facilities and integrated into existing workflows. We are collaborating closely with our partners, including leading research institutions, to test the tools with real experimental data. For example, we will use data from experiments on thin films, nanoparticle arrangements, and advanced imaging techniques to validate the tools in real-world settings.

We are also working on strategies to share the tools widely. This includes making the software available as open-source, providing containerized applications for easy installation, and offering cloud-based services. These deployment methods ensure that researchers across the world can access and use the tools, regardless of their resources or technical expertise. Additionally, we are designing the software to fit seamlessly into the diverse data pipelines and workflows used by different research institutions. This ensures the tools are not only powerful but also practical and easy to adopt.

Knowledge Transfer

We create comprehensive documentation to guide researchers in using the tools effectively, from basic setup to advanced applications. This documentation will evolve throughout the project, incorporating feedback and lessons learned.

In addition to written guides, we are organizing workshops, training sessions, and educational programs to teach researchers how to use the tools. These programs will target not only the project partners but also the broader scientific community. We also plan to share our results through scientific publications, presentations at conferences, and outreach events, raising awareness about the project and its achievements.

By building a global community of researchers who can use, contribute to, and benefit from these tools, we ensure the project’s long-term impact. This includes collaborations with universities to incorporate the tools into educational curricula, ensuring that the next generation of scientists is equipped to use cutting-edge AI technologies in their work. Through these efforts, we aim to make the project’s innovations accessible, impactful, and sustainable for years to come.